Top Related Projects

OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation

Efficient 3D human pose estimation in video using 2D keypoint trajectories

Official implementation of CVPR2020 paper "VIBE: Video Inference for Human Body Pose and Shape Estimation"

Expressive Body Capture: 3D Hands, Face, and Body from a Single Image

Quick Overview

EasyMocap is an open-source toolbox for markerless human motion capture from multi-view video inputs. It provides a comprehensive pipeline for 3D human pose and shape estimation, including multi-person tracking, body mesh recovery, and motion capture. The project aims to make motion capture accessible and easy to use for researchers and practitioners in computer vision and graphics.

Pros

- Supports various input formats, including multi-view videos and images

- Offers both single-person and multi-person motion capture capabilities

- Provides a complete pipeline from 2D detection to 3D reconstruction

- Includes tools for data preprocessing, visualization, and evaluation

Cons

- Requires multiple camera views for optimal performance

- May have limitations in handling complex occlusions or crowded scenes

- Depends on pre-trained models, which may not generalize well to all scenarios

- Installation and setup process can be complex for beginners

Code Examples

- Loading and visualizing a 3D human mesh:

from easymocap.smplmodel import SMPLModel

from easymocap.mytools.vis_base import plot_meshes

# Load SMPL model

model = SMPLModel()

# Generate a sample pose and shape

pose = np.zeros(72)

shape = np.zeros(10)

# Get vertices and faces

vertices = model(pose, shape)

faces = model.faces

# Visualize the mesh

plot_meshes(vertices, faces)

- Performing 2D keypoint detection on an image:

from easymocap.estimator import Detector2D

# Initialize the 2D detector

detector = Detector2D('hrnet')

# Load an image

image = cv2.imread('path/to/image.jpg')

# Detect 2D keypoints

keypoints = detector(image)

# Visualize the results

from easymocap.mytools.vis_base import plot_keypoints_2d

plot_keypoints_2d(image, keypoints)

- Running multi-view 3D reconstruction:

from easymocap.pipeline import mvpose

# Define camera parameters and input data

cameras = load_cameras('path/to/cameras.json')

images = load_images('path/to/images')

# Run multi-view 3D reconstruction

results = mvpose(images, cameras)

# Visualize the 3D poses

from easymocap.mytools.vis_base import plot_3d_poses

plot_3d_poses(results['poses3d'])

Getting Started

-

Install dependencies:

pip install -r requirements.txt -

Download pre-trained models:

bash scripts/download_models.sh -

Run the demo:

python apps/demo/run_mvpose.py --input path/to/videos --out_dir output -

Visualize results:

python apps/vis/vis_smpl.py --input output/smpl --out_dir output/render

Competitor Comparisons

OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation

Pros of OpenPose

- More mature and widely adopted in the research community

- Supports real-time multi-person keypoint detection

- Offers pre-trained models for various body parts (face, hand, foot)

Cons of OpenPose

- Requires more computational resources

- Less flexible for customization and extension

- Limited to 2D pose estimation without built-in 3D reconstruction

Code Comparison

OpenPose:

from openpose import pyopenpose as op

params = dict(model_folder="./models/")

opWrapper = op.WrapperPython()

opWrapper.configure(params)

opWrapper.start()

datum = op.Datum()

opWrapper.emplaceAndPop(op.VectorDatum([datum]))

EasyMocap:

from easymocap.estimator import BaseEstimator

estimator = BaseEstimator(cfg)

keypoints2d, scores = estimator(image)

EasyMocap provides a more streamlined API for pose estimation, while OpenPose offers more granular control over the process. EasyMocap is designed for easier integration into 3D motion capture pipelines, whereas OpenPose focuses on robust 2D keypoint detection across various scenarios.

Efficient 3D human pose estimation in video using 2D keypoint trajectories

Pros of VideoPose3D

- More advanced 3D pose estimation, especially for complex motions

- Better handling of occlusions and challenging camera angles

- Extensive documentation and research backing

Cons of VideoPose3D

- Higher computational requirements

- Steeper learning curve for implementation

- Less flexibility for multi-view setups

Code Comparison

EasyMocap:

from easymocap.dataset import CONFIG

from easymocap.smplmodel import SMPLModel

dataset = MviewDataset(path, cams, out)

smpl = SMPLModel(model_path)

VideoPose3D:

from common.arguments import parse_args

from common.camera import *

from common.model import *

args = parse_args()

model_pos = TemporalModel(args.num_joints, 2, args.num_joints, filter_widths, causal=args.causal)

EasyMocap focuses on simplicity and ease of use, with a more straightforward API for multi-view setups. VideoPose3D offers more advanced features and customization options, but requires more setup and understanding of its architecture. Both projects aim to provide 3D human pose estimation, but cater to different use cases and expertise levels.

Official implementation of CVPR2020 paper "VIBE: Video Inference for Human Body Pose and Shape Estimation"

Pros of VIBE

- More advanced temporal modeling using LSTM for smoother motion estimation

- Supports both single-view and multi-view input

- Provides pre-trained models for quick deployment

Cons of VIBE

- Higher computational requirements due to complex architecture

- Less flexibility in terms of customization and fine-tuning

- Steeper learning curve for beginners

Code Comparison

VIBE example:

vibe = VIBE_Demo(args.cfg, args.checkpoint)

output = vibe.run(video_file)

EasyMocap example:

mocap = EasyMocap(cfg)

output = mocap.run(video_file)

Both repositories aim to perform 3D human pose estimation, but VIBE focuses on video-based estimation with temporal coherence, while EasyMocap offers a more versatile toolkit for various mocap scenarios. VIBE excels in producing smooth, temporally consistent results, making it suitable for animation and motion analysis. EasyMocap, on the other hand, provides a more comprehensive set of tools for different capture setups and is generally easier to adapt to specific use cases.

Expressive Body Capture: 3D Hands, Face, and Body from a Single Image

Pros of SMPLify-X

- More advanced body model (SMPL-X) that includes face and hand details

- Supports estimation of body shape parameters

- Provides more detailed pose estimation, including finger movements

Cons of SMPLify-X

- More complex setup and dependencies

- Slower processing time due to increased model complexity

- Requires more computational resources

Code Comparison

SMPLify-X:

# Fit SMPL-X model to observations

smplx_output = smplx_model(betas=betas, body_pose=body_pose, global_orient=global_orient)

vertices = smplx_output.vertices

joints = smplx_output.joints

EasyMocap:

# Fit SMPL model to observations

smpl_output = smpl_model(poses=poses, betas=betas, trans=trans)

vertices = smpl_output.vertices

joints = smpl_output.joints

Both repositories aim to perform 3D human pose estimation, but SMPLify-X offers more detailed results at the cost of increased complexity and computational requirements. EasyMocap provides a simpler, more accessible approach that may be sufficient for many applications. The code snippets illustrate the similar structure but highlight the additional parameters and complexity in SMPLify-X for handling the more advanced SMPL-X model.

Convert  designs to code with AI

designs to code with AI

Introducing Visual Copilot: A new AI model to turn Figma designs to high quality code using your components.

Try Visual CopilotREADME

EasyMocap is an open-source toolbox for markerless human motion capture and novel view synthesis from RGB videos. In this project, we provide a lot of motion capture demos in different settings.

News

- :tada: Our SIGGRAPH 2022 Novel View Synthesis of Human Interactions From Sparse Multi-view Videos is released! Check the documentation.

- :tada: EasyMocap v0.2 is released! We support motion capture from Internet videos. Please check the Quick Start for more details.

Core features

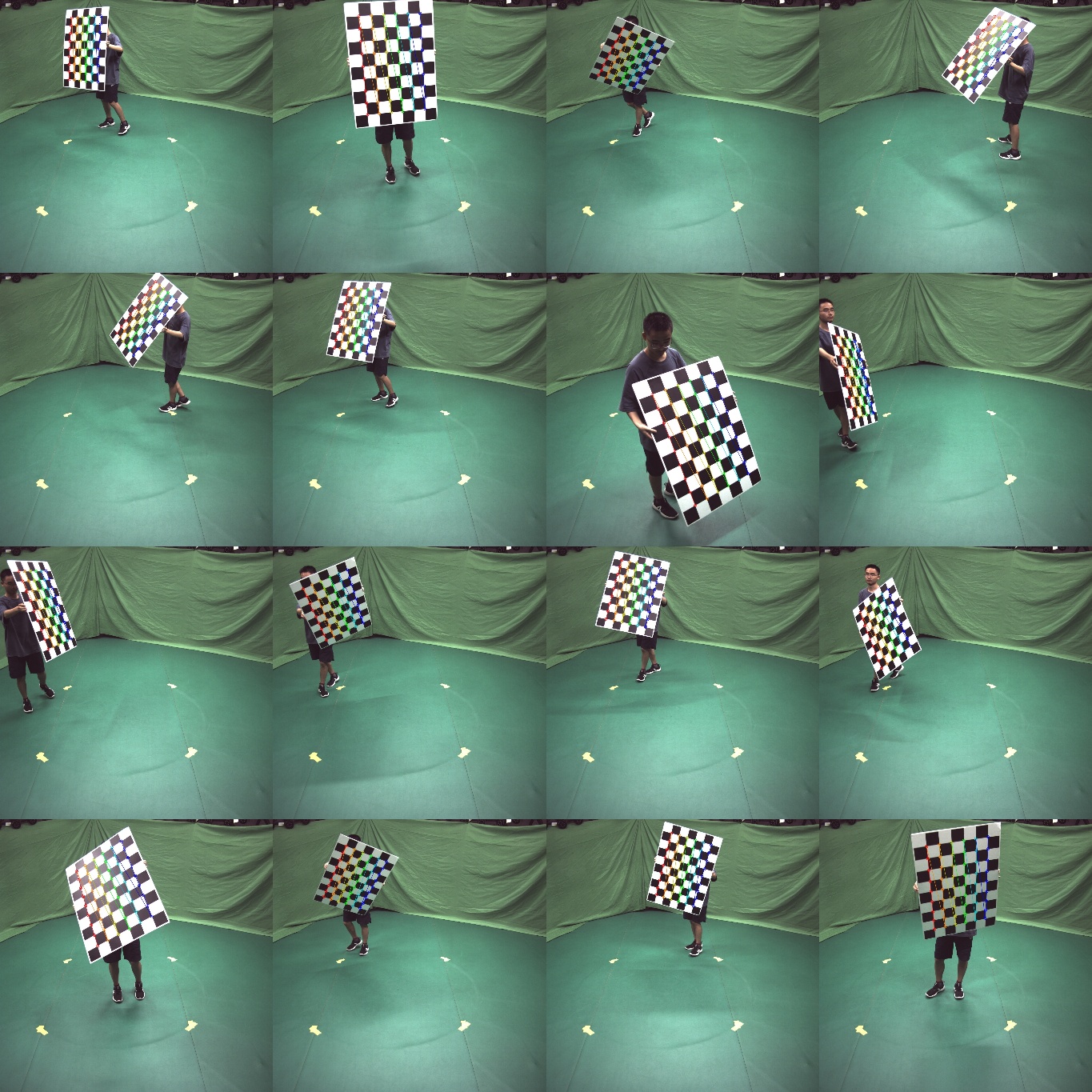

Multiple views of a single person

This is the basic code for fitting SMPL1/SMPL+H2/SMPL-X3/MANO2 model to capture body+hand+face poses from multiple views.

Videos are from ZJU-MoCap, with 23 calibrated and synchronized cameras.

Captured with 8 cameras.

Internet video

This part is the basic code for fitting SMPL1 with 2D keypoints estimation45 and CNN initialization6.

Internet video with a mirror

Multiple Internet videos with a specific action (Coming soon)

Internet videos of Roger Federer's serving

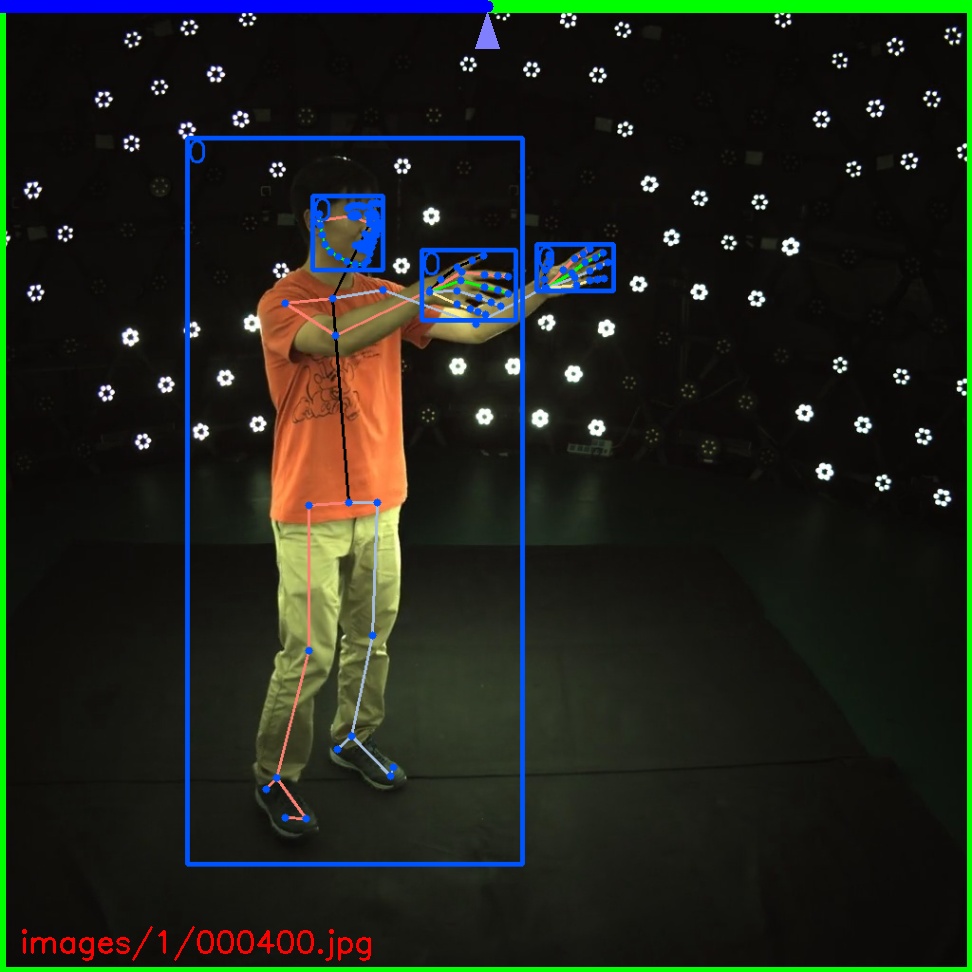

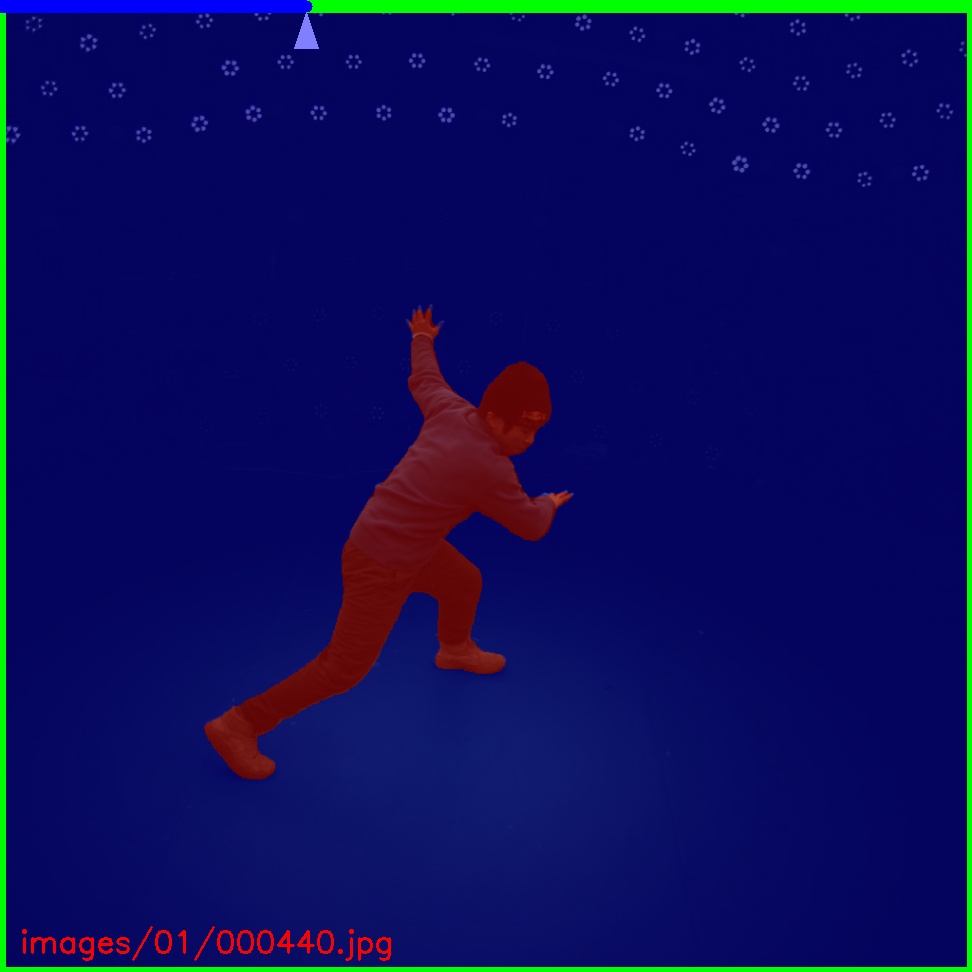

Multiple views of multiple people

Captured with 8 consumer cameras

Novel view synthesis from sparse views

Novel view synthesis for chanllenge motion(coming soon)

Novel view synthesis for human interaction

ZJU-MoCap

With our proposed method, we release two large dataset of human motion: LightStage and Mirrored-Human. See the website for more details.

If you would like to download the ZJU-Mocap dataset, please sign the agreement, and email it to Qing Shuai (s_q@zju.edu.cn) and cc Xiaowei Zhou (xwzhou@zju.edu.cn) to request the download link.

LightStage: captured with LightStage system

Mirrored-Human: collected from the Internet

Many works have achieved wonderful results based on our dataset:

- Real-time volumetric rendering of dynamic humans

- CVPR2022: HumanNeRF: Free-viewpoint Rendering of Moving People from Monocular Video

- ECCV2022: KeypointNeRF: Generalizing Image-based Volumetric Avatars using Relative Spatial Encoding of Keypoints

- SIGGRAPH 2022 paper Drivable Volumetric Avatars using Texel-Aligned Features

Other features

3D Realtime visualization

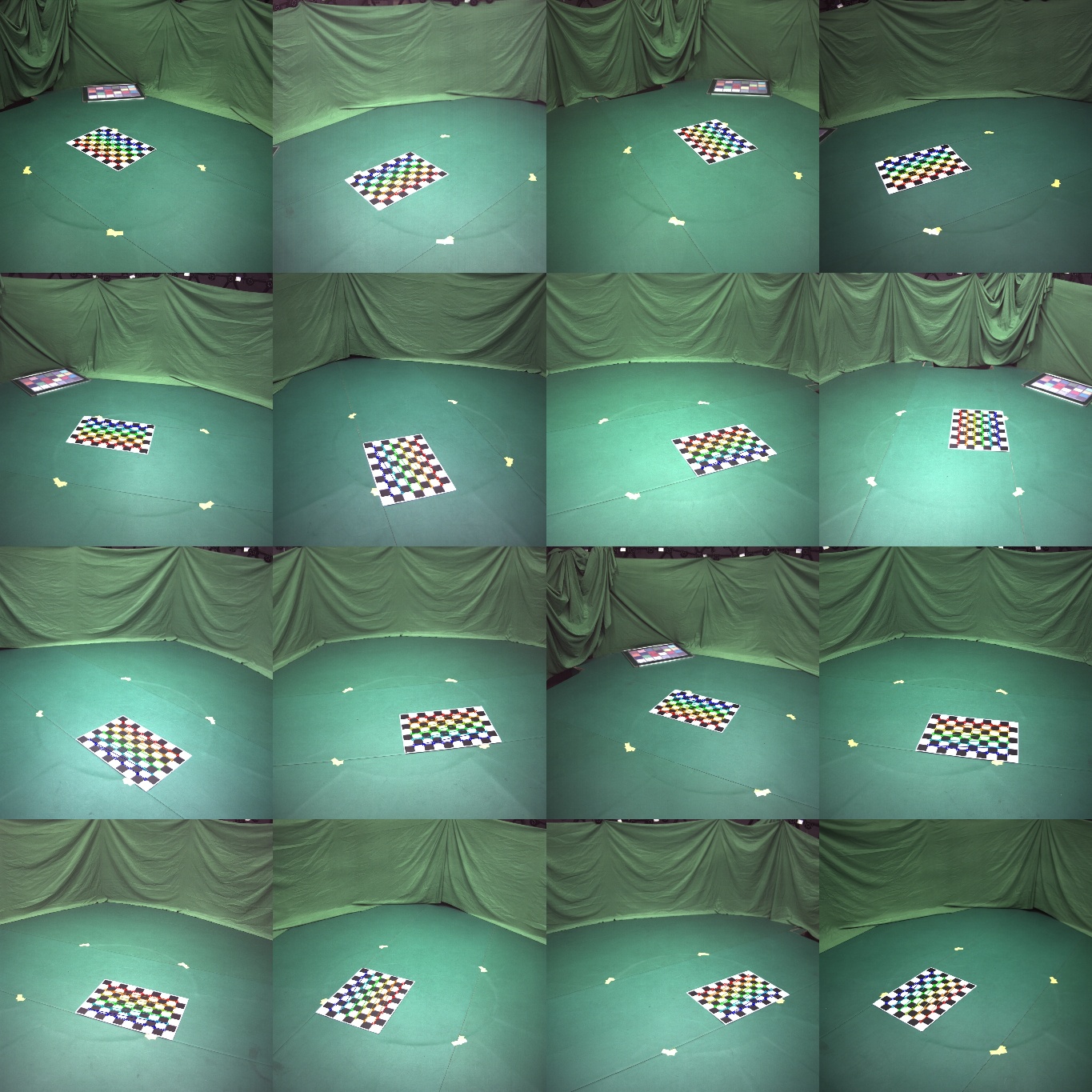

Camera calibration

Calibration for intrinsic and extrinsic parameters

Annotator

Annotator for bounding box, keypoints and mask

Updates

- 11/03/2022: Support MultiNeuralBody.

- 12/25/2021: Support mediapipe keypoints detector.

- 08/09/2021: Add a colab demo here.

- 06/28/2021: The Multi-view Multi-person part is released!

- 06/10/2021: The real-time 3D visualization part is released!

- 04/11/2021: The calibration tool and the annotator are released.

- 04/11/2021: Mirrored-Human part is released.

Installation

See documentation for more instructions.

Acknowledgements

Here are the great works this project is built upon:

- SMPL models and layer are from MPII SMPL-X model.

- Some functions are borrowed from SPIN, VIBE, SMPLify-X

- The method for fitting 3D skeleton and SMPL model is similar to SMPLify-X(with 3D keypoints loss), TotalCapture(without using point clouds).

- We integrate some easy-to-use functions for previous great work:

Contact

Please open an issue if you have any questions. We appreciate all contributions to improve our project.

Contributor

EasyMocap is built by researchers from the 3D vision group of Zhejiang University: Qing Shuai, Qi Fang, Junting Dong, Sida Peng, Di Huang, Hujun Bao, and Xiaowei Zhou.

We would like to thank Wenduo Feng, Di Huang, Yuji Chen, Hao Xu, Qing Shuai, Qi Fang, Ting Xie, Junting Dong, Sida Peng and Xiaopeng Ji who are the performers in the sample data. We would also like to thank all the people who has helped EasyMocap in any way.

Citation

This project is a part of our work iMocap, Mirrored-Human, mvpose, Neural Body, MultiNeuralBody, enerf.

Please consider citing these works if you find this repo is useful for your projects.

@Misc{easymocap,

title = {EasyMoCap - Make human motion capture easier.},

howpublished = {Github},

year = {2021},

url = {https://github.com/zju3dv/EasyMocap}

}

@inproceedings{shuai2022multinb,

title={Novel View Synthesis of Human Interactions from Sparse

Multi-view Videos},

author={Shuai, Qing and Geng, Chen and Fang, Qi and Peng, Sida and Shen, Wenhao and Zhou, Xiaowei and Bao, Hujun},

booktitle={SIGGRAPH Conference Proceedings},

year={2022}

}

@inproceedings{lin2022efficient,

title={Efficient Neural Radiance Fields for Interactive Free-viewpoint Video},

author={Lin, Haotong and Peng, Sida and Xu, Zhen and Yan, Yunzhi and Shuai, Qing and Bao, Hujun and Zhou, Xiaowei},

booktitle={SIGGRAPH Asia Conference Proceedings},

year={2022}

}

@inproceedings{dong2021fast,

title={Fast and Robust Multi-Person 3D Pose Estimation and Tracking from Multiple Views},

author={Dong, Junting and Fang, Qi and Jiang, Wen and Yang, Yurou and Bao, Hujun and Zhou, Xiaowei},

booktitle={T-PAMI},

year={2021}

}

@inproceedings{dong2020motion,

title={Motion capture from internet videos},

author={Dong, Junting and Shuai, Qing and Zhang, Yuanqing and Liu, Xian and Zhou, Xiaowei and Bao, Hujun},

booktitle={European Conference on Computer Vision},

pages={210--227},

year={2020},

organization={Springer}

}

@inproceedings{peng2021neural,

title={Neural Body: Implicit Neural Representations with Structured Latent Codes for Novel View Synthesis of Dynamic Humans},

author={Peng, Sida and Zhang, Yuanqing and Xu, Yinghao and Wang, Qianqian and Shuai, Qing and Bao, Hujun and Zhou, Xiaowei},

booktitle={CVPR},

year={2021}

}

@inproceedings{fang2021mirrored,

title={Reconstructing 3D Human Pose by Watching Humans in the Mirror},

author={Fang, Qi and Shuai, Qing and Dong, Junting and Bao, Hujun and Zhou, Xiaowei},

booktitle={CVPR},

year={2021}

}

Footnotes

-

Loper, Matthew, et al. "SMPL: A skinned multi-person linear model." ACM transactions on graphics (TOG) 34.6 (2015): 1-16. ↩ ↩2

-

Romero, Javier, Dimitrios Tzionas, and Michael J. Black. "Embodied hands: Modeling and capturing hands and bodies together." ACM Transactions on Graphics (ToG) 36.6 (2017): 1-17. ↩ ↩2

-

Pavlakos, Georgios, et al. "Expressive body capture: 3d hands, face, and body from a single image." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019. ↩

-

Cao, Z., Hidalgo, G., Simon, T., Wei, S.E., Sheikh, Y.: Openpose: real-time multi-person 2d pose estimation using part affinity fields. arXiv preprint arXiv:1812.08008 (2018) ↩ ↩2

-

Sun, Ke, et al. "Deep high-resolution representation learning for human pose estimation." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019. ↩

-

Kolotouros, Nikos, et al. "Learning to reconstruct 3D human pose and shape via model-fitting in the loop." Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019 ↩ ↩2

-

Bochkovskiy, Alexey, Chien-Yao Wang, and Hong-Yuan Mark Liao. "Yolov4: Optimal speed and accuracy of object detection." arXiv preprint arXiv:2004.10934 (2020). ↩

Top Related Projects

OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation

Efficient 3D human pose estimation in video using 2D keypoint trajectories

Official implementation of CVPR2020 paper "VIBE: Video Inference for Human Body Pose and Shape Estimation"

Expressive Body Capture: 3D Hands, Face, and Body from a Single Image

Convert  designs to code with AI

designs to code with AI

Introducing Visual Copilot: A new AI model to turn Figma designs to high quality code using your components.

Try Visual Copilot