Top Related Projects

Robust Speech Recognition via Large-Scale Weak Supervision

Port of OpenAI's Whisper model in C/C++

WhisperX: Automatic Speech Recognition with Word-level Timestamps (& Diarization)

High-performance GPGPU inference of OpenAI's Whisper automatic speech recognition (ASR) model

Faster Whisper transcription with CTranslate2

Quick Overview

Whisper.cpp is a C++ port of OpenAI's Whisper automatic speech recognition (ASR) model. It provides efficient CPU inference of Whisper, allowing for fast and accurate transcription of audio without requiring a GPU. This project aims to make Whisper more accessible and performant on a wider range of devices.

Pros

- High performance on CPU, enabling fast transcription without specialized hardware

- Smaller memory footprint compared to the original Python implementation

- Cross-platform compatibility, supporting various operating systems and architectures

- Active development and community support

Cons

- Limited to inference only; training or fine-tuning is not supported

- May have slight accuracy differences compared to the original Whisper implementation

- Requires models in the ggml format; pre-converted models can be downloaded, but other checkpoints must be converted first

- The core API is C-style, so use from other languages depends on the available bindings

Code Examples

- Basic transcription:

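#include <cstdio>

#include <vector>

#include "whisper.h"

int main() {

// whisper_full expects 16 kHz mono float samples, not a file path.

// Decoding audio.wav into this buffer is omitted from the sketch.

std::vector<float> pcmf32;

struct whisper_context * ctx = whisper_init_from_file("ggml-base.en.bin");

if (ctx == nullptr) return 1;

whisper_full_params params = whisper_full_default_params(WHISPER_SAMPLING_GREEDY);

if (whisper_full(ctx, params, pcmf32.data(), (int) pcmf32.size()) != 0) {

whisper_free(ctx);

return 1;

}

const int n_segments = whisper_full_n_segments(ctx);

for (int i = 0; i < n_segments; ++i) {

const char * text = whisper_full_get_segment_text(ctx, i);

printf("%s", text);

}

whisper_free(ctx);

return 0;

}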

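- Printing segments as they are decoded (the audio is still passed as one buffer):

#include <vector>

#include "whisper.h"

int main() {

// 16 kHz mono float samples; loading them is omitted from the sketch

std::vector<float> pcmf32;

struct whisper_context * ctx = whisper_init_from_file("ggml-base.en.bin");

whisper_full_params params = whisper_full_default_params(WHISPER_SAMPLING_GREEDY);

// print each segment as soon as it is decoded

params.print_realtime = true;

params.print_progress = false;

whisper_full(ctx, params, pcmf32.data(), (int) pcmf32.size());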

whisper_free(ctx);

return 0;

}

- Language detection:

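#include <cstdio>

#include <vector>

#include "whisper.h"

int main() {

// 16 kHz mono float samples; loading them is omitted from the sketch

std::vector<float> pcmf32;

struct whisper_context * ctx = whisper_init_from_file("ggml-base.bin");

whisper_full_params params = whisper_full_default_params(WHISPER_SAMPLING_GREEDY);

params.language = "auto";

whisper_full(ctx, params, pcmf32.data(), (int) pcmf32.size());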

const char * detected_language = whisper_lang_str(whisper_full_lang_id(ctx));

printf("Detected language: %s\n", detected_language);

whisper_free(ctx);

return 0;

}

Getting Started

- Clone the repository:

git clone https://github.com/ggml-org/whisper.cpp.git

- Build the project:

cd whisper.cpp

cmake -B build

cmake --build build -j --config Release

- Download a Whisper model in ggml format (e.g., ggml-base.en.bin):

sh ./models/download-ggml-model.sh base.en

- Run the example:

./build/bin/whisper-cli -m models/ggml-base.en.bin -f samples/jfk.wav

This transcribes the bundled audio sample using the specified model.

Competitor Comparisons

Robust Speech Recognition via Large-Scale Weak Supervision

Pros of Whisper

- Developed and maintained by OpenAI, ensuring high-quality and cutting-edge features

- Supports a wide range of languages and accents

- Offers more advanced features like language detection and translation

Cons of Whisper

- Requires more computational resources and has higher system requirements

- Slower inference speed, especially on CPU-only systems

- More complex to set up and use, particularly for beginners

Code Comparison

Whisper (Python):

import whisper

model = whisper.load_model("base")

result = model.transcribe("audio.mp3")

print(result["text"])

whisper.cpp (C++):

#include "whisper.h"

whisper_context * ctx = whisper_init_from_file("ggml-base.en.bin");

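whisper_full_params params = whisper_full_default_params(WHISPER_SAMPLING_GREEDY);

// pcmf32 is a std::vector<float> of 16 kHz mono samples, loaded elsewhere

whisper_full(ctx, params, pcmf32.data(), (int) pcmf32.size());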

whisper_print_timings(ctx);

whisper_free(ctx);

whisper.cpp is a C/C++ port of Whisper, optimized for efficiency and lower resource usage. It's faster on CPU-only systems and easier to integrate into embedded or mobile applications. It runs the same models converted to ggml format, so language coverage follows the model you choose; however, it is inference-only and may lack some features of the original Python implementation.

Port of OpenAI's Whisper model in C/C++

Pros of whisper.cpp

- Efficient C++ implementation for faster processing

- Smaller memory footprint compared to Python version

- Cross-platform compatibility

Cons of whisper.cpp

- May lack some features present in the original Python implementation

- Potentially more complex setup for non-C++ developers

- Could have fewer community contributions due to language barrier

Code Comparison

whisper.cpp:

int main(int argc, char ** argv) {

whisper_context * ctx = whisper_init_from_file("ggml-base.en.bin");

whisper_full_params params = whisper_full_default_params(WHISPER_SAMPLING_GREEDY);

whisper_full(ctx, params, pcmf32.data(), pcmf32.size());

whisper_print_timings(ctx);

whisper_free(ctx);

return 0;

}

Both repositories are actually the same project, so there isn't a direct code comparison to be made. The whisper.cpp project is a C/C++ implementation of OpenAI's Whisper automatic speech recognition (ASR) model, focusing on efficiency and portability. It allows for running Whisper inference on various platforms, including mobile devices and web browsers, with optimized performance.

WhisperX: Automatic Speech Recognition with Word-level Timestamps (& Diarization)

Pros of WhisperX

- Offers advanced features like word-level timestamps and speaker diarization

- Provides improved accuracy through additional language models and alignment techniques

- Supports multiple audio processing backends (PyAV, FFmpeg)

Cons of WhisperX

- Requires more computational resources due to additional processing steps

- May have slower inference times compared to the lightweight whisper.cpp

- Depends on Python and additional libraries, potentially limiting portability

Code Comparison

whisper.cpp:

int whisper_full(

struct whisper_context * ctx,

struct whisper_full_params params,

const float * samples,

int n_samples

) {

// Simplified C implementation

}

WhisperX:

def transcribe(

audio,

model,

language=None,

task="transcribe",

vad=True,

**kwargs

):

# Python implementation with additional features

The code comparison highlights the difference in implementation languages and complexity. whisper.cpp uses C for a lightweight and efficient approach, while WhisperX leverages Python for more advanced features and easier integration with machine learning libraries.

High-performance GPGPU inference of OpenAI's Whisper automatic speech recognition (ASR) model

Pros of Whisper

- Optimized for Windows and DirectCompute, potentially offering better performance on Windows systems

- Includes a GUI application for easier use by non-technical users

- Supports real-time transcription with low latency

Cons of Whisper

- Limited cross-platform support compared to whisper.cpp

- Smaller community and potentially less frequent updates

- May have fewer language models available

Code Comparison

whisper.cpp:

// Load model

struct whisper_context * ctx = whisper_init_from_file("ggml-base.en.bin");

// Process audio

whisper_full(ctx, params, pcmf32.data(), (int) pcmf32.size());

// Print result

const int n_segments = whisper_full_n_segments(ctx);

for (int i = 0; i < n_segments; ++i) {

const char * text = whisper_full_get_segment_text(ctx, i);

printf("%s", text);

}

Whisper (schematic only; not verified against the project's actual API):

// Load model

auto model = whisper::Model::create("ggml-base.en.bin");

// Process audio

auto result = model->process(pcmf32.data(), pcmf32.size());

// Print result

for (const auto& segment : result.segments) {

printf("%s", segment.text.c_str());

}

Both repositories implement the Whisper speech recognition model, but with different focuses. whisper.cpp aims for broad platform support and simplicity, while Whisper optimizes for Windows performance and includes a GUI. The Whisper snippet above is schematic; consult that project's own documentation for its actual interface.

Faster Whisper transcription with CTranslate2

Pros of faster-whisper

- Optimized for speed, potentially offering faster transcription

- Supports streaming audio input for real-time transcription

- Provides more flexible API and configuration options

Cons of faster-whisper

- May have higher memory requirements due to optimization techniques

- Less portable than whisper.cpp, which is designed for broader compatibility

- Potentially more complex setup and integration process

Code Comparison

whisper.cpp:

#include "whisper.h"

int main() {

struct whisper_context * ctx = whisper_init_from_file("model.bin");

// wparams: whisper_full_params; pcm / pcm_len: 16 kHz mono float samples and their count

whisper_full(ctx, wparams, pcm, pcm_len);

whisper_print_timings(ctx);

}

faster-whisper:

from faster_whisper import WhisperModel

model = WhisperModel("large-v2", device="cuda", compute_type="float16")

segments, info = model.transcribe("audio.mp3", beam_size=5)

for segment in segments:

print("[%.2fs -> %.2fs] %s" % (segment.start, segment.end, segment.text))

README

whisper.cpp

High-performance inference of OpenAI's Whisper automatic speech recognition (ASR) model:

- Plain C/C++ implementation without dependencies

- Apple Silicon first-class citizen - optimized via ARM NEON, Accelerate framework, Metal and Core ML

- AVX intrinsics support for x86 architectures

- VSX intrinsics support for POWER architectures

- Mixed F16 / F32 precision

- Integer quantization support

- Zero memory allocations at runtime

- Vulkan support

- Support for CPU-only inference

- Efficient GPU support for NVIDIA

- OpenVINO Support

- Ascend NPU Support

- Moore Threads GPU Support

- C-style API

- Voice Activity Detection (VAD)

Supported platforms:

- Mac OS (Intel and Arm)

- iOS

- Android

- Java

- Linux / FreeBSD

- WebAssembly

- Windows (MSVC and MinGW)

- Raspberry Pi

- Docker

The entire high-level implementation of the model is contained in whisper.h and whisper.cpp.

The rest of the code is part of the ggml machine learning library.

Such a lightweight implementation makes it easy to integrate the model into different platforms and applications. As an example, here is a video of running the model on an iPhone 13 device - fully offline, on-device: whisper.objc

https://user-images.githubusercontent.com/1991296/197385372-962a6dea-bca1-4d50-bf96-1d8c27b98c81.mp4

You can also easily make your own offline voice assistant application: command

https://user-images.githubusercontent.com/1991296/204038393-2f846eae-c255-4099-a76d-5735c25c49da.mp4

On Apple Silicon, the inference runs fully on the GPU via Metal:

https://github.com/ggml-org/whisper.cpp/assets/1991296/c82e8f86-60dc-49f2-b048-d2fdbd6b5225

Quick start

First clone the repository:

git clone https://github.com/ggml-org/whisper.cpp.git

Navigate into the directory:

cd whisper.cpp

Then, download one of the Whisper models converted in ggml format. For example:

sh ./models/download-ggml-model.sh base.en

Now build the whisper-cli example and transcribe an audio file like this:

# build the project

cmake -B build

cmake --build build -j --config Release

# transcribe an audio file

./build/bin/whisper-cli -f samples/jfk.wav

For a quick demo, simply run make base.en.

The command downloads the base.en model converted to custom ggml format and runs the inference on all .wav samples in the folder samples.

For detailed usage instructions, run: ./build/bin/whisper-cli -h

Note that the whisper-cli example currently runs only with 16-bit WAV files, so make sure to convert your input before running the tool.

For example, you can use ffmpeg like this:

ffmpeg -i input.mp3 -ar 16000 -ac 1 -c:a pcm_s16le output.wav
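To confirm that a file already matches what the ffmpeg command above produces, you can inspect its WAV header. The following is a minimal sketch using Python's standard wave module; the helper names `wav_info` and `matches_ffmpeg_target` are hypothetical, and the check simply mirrors the flags used above (`-ar 16000 -ac 1 -c:a pcm_s16le`).

```python
import wave


def wav_info(path):
    """Return (sample_rate_hz, channels, bits_per_sample) read from a WAV header."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate(), wav.getnchannels(), wav.getsampwidth() * 8


def matches_ffmpeg_target(path):
    """True if the file is 16 kHz, mono, 16-bit PCM (the format the ffmpeg command writes)."""
    return wav_info(path) == (16000, 1, 16)
```

A file for which `matches_ffmpeg_target` returns False would need to go through the ffmpeg conversion first.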

More audio samples

If you want some extra audio samples to play with, simply run:

make -j samples

This will download a few more audio files from Wikipedia and convert them to 16-bit WAV format via ffmpeg.

You can download and run the other models as follows:

make -j tiny.en

make -j tiny

make -j base.en

make -j base

make -j small.en

make -j small

make -j medium.en

make -j medium

make -j large-v1

make -j large-v2

make -j large-v3

make -j large-v3-turbo

Memory usage

| Model | Disk | Mem |

|---|---|---|

| tiny | 75 MiB | ~273 MB |

| base | 142 MiB | ~388 MB |

| small | 466 MiB | ~852 MB |

| medium | 1.5 GiB | ~2.1 GB |

| large | 2.9 GiB | ~3.9 GB |
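As a rough illustration of how the table can guide model choice, the sketch below picks the largest model whose approximate memory use fits a given budget. The numbers are copied from the Mem column above (with GB converted to MB); the function name `largest_model_that_fits` is hypothetical, and real memory use will vary with build options and backend.

```python
# Approximate runtime memory per model in MB, taken from the table above.
MODEL_MEM_MB = {
    "tiny": 273,
    "base": 388,
    "small": 852,
    "medium": 2100,
    "large": 3900,
}


def largest_model_that_fits(available_mb):
    """Return the largest model whose listed memory use is within available_mb, or None."""
    fitting = [name for name, mem in MODEL_MEM_MB.items() if mem <= available_mb]
    # The dict is ordered from smallest to largest, so the last match is the largest.
    return fitting[-1] if fitting else None
```

For example, `largest_model_that_fits(1024)` selects "small", since "medium" is listed at roughly 2.1 GB.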

POWER VSX Intrinsics

whisper.cpp supports POWER architectures and includes code which

significantly speeds operation on Linux running on POWER9/10, making it

capable of faster-than-realtime transcription on underclocked Raptor

Talos II. Ensure you have a BLAS package installed, and replace the

standard cmake setup with:

# build with GGML_BLAS defined

cmake -B build -DGGML_BLAS=1

cmake --build build -j --config Release

./build/bin/whisper-cli [ .. etc .. ]

Quantization

whisper.cpp supports integer quantization of the Whisper ggml models.

Quantized models require less memory and disk space and depending on the hardware can be processed more efficiently.

Here are the steps for creating and using a quantized model:

# quantize a model with Q5_0 method

cmake -B build

cmake --build build -j --config Release

./build/bin/quantize models/ggml-base.en.bin models/ggml-base.en-q5_0.bin q5_0

# run the examples as usual, specifying the quantized model file

./build/bin/whisper-cli -m models/ggml-base.en-q5_0.bin ./samples/gb0.wav
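To give an intuition for why quantized models are smaller, here is a simplified sketch of block-wise 5-bit quantization: each block of weights shares one scale, and each weight is stored as a small integer. This is not ggml's exact Q5_0 layout or rounding; the block size of 32 and the 16-bit scale used in the size estimate are assumptions made for illustration.

```python
def quantize_block(values, bits=5):
    """Quantize one block of floats to signed integers that share a single scale."""
    qmax = 2 ** (bits - 1) - 1  # 15 for 5 bits; the integer range is [-16, 15]
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quants = [max(-qmax - 1, min(qmax, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the shared scale and the stored integers."""
    return [scale * q for q in quants]


def bits_per_weight(block_size=32, bits=5, scale_bits=16):
    """Average storage per weight when each block carries one scale (assumed sizes)."""
    return (block_size * bits + scale_bits) / block_size
```

Under these assumptions `bits_per_weight()` gives 5.5 bits per weight, compared with 16 bits for F16, at the cost of a rounding error of at most half a quantization step per weight.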

Core ML support

On Apple Silicon devices, the Encoder inference can be executed on the Apple Neural Engine (ANE) via Core ML. This can result in significant

speed-up - more than x3 faster compared with CPU-only execution. Here are the instructions for generating a Core ML model and using it with whisper.cpp:

- Install Python dependencies needed for the creation of the Core ML model:

pip install ane_transformers

pip install openai-whisper

pip install coremltools

  - To ensure coremltools operates correctly, please confirm that Xcode is installed and execute xcode-select --install to install the command-line tools.

  - Python 3.11 is recommended.

  - MacOS Sonoma (version 14) or newer is recommended, as older versions of MacOS might experience issues with transcription hallucination.

  - [OPTIONAL] It is recommended to utilize a Python version management system, such as Miniconda for this step:

    - To create an environment, use: conda create -n py311-whisper python=3.11 -y

    - To activate the environment, use: conda activate py311-whisper

- Generate a Core ML model. For example, to generate a base.en model, use:

./models/generate-coreml-model.sh base.en

This will generate the folder models/ggml-base.en-encoder.mlmodelc

- Build whisper.cpp with Core ML support:

# using CMake

cmake -B build -DWHISPER_COREML=1

cmake --build build -j --config Release

- Run the examples as usual. For example:

$ ./build/bin/whisper-cli -m models/ggml-base.en.bin -f samples/jfk.wav

...

whisper_init_state: loading Core ML model from 'models/ggml-base.en-encoder.mlmodelc'

whisper_init_state: first run on a device may take a while ...

whisper_init_state: Core ML model loaded

system_info: n_threads = 4 / 10 | AVX = 0 | AVX2 = 0 | AVX512 = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 0 | VSX = 0 | COREML = 1 |

...

The first run on a device is slow, since the ANE service compiles the Core ML model to some device-specific format. Next runs are faster.

For more information about the Core ML implementation please refer to PR #566.

OpenVINO support

On platforms that support OpenVINO, the Encoder inference can be executed on OpenVINO-supported devices including x86 CPUs and Intel GPUs (integrated & discrete).

This can result in significant speedup in encoder performance. Here are the instructions for generating the OpenVINO model and using it with whisper.cpp:

- First, setup python virtual env. and install python dependencies. Python 3.10 is recommended.

Windows:

cd models

python -m venv openvino_conv_env

openvino_conv_env\Scripts\activate

python -m pip install --upgrade pip

pip install -r requirements-openvino.txt

Linux and macOS:

cd models

python3 -m venv openvino_conv_env

source openvino_conv_env/bin/activate

python -m pip install --upgrade pip

pip install -r requirements-openvino.txt

- Generate an OpenVINO encoder model. For example, to generate a base.en model, use:

python convert-whisper-to-openvino.py --model base.en

This will produce ggml-base.en-encoder-openvino.xml/.bin IR model files. It's recommended to relocate these to the same folder as ggml models, as that is the default location that the OpenVINO extension will search at runtime.

- Build whisper.cpp with OpenVINO support:

Download OpenVINO package from release page. The recommended version to use is 2024.6.0. Ready to use Binaries of the required libraries can be found in the OpenVino Archives

After downloading & extracting package onto your development system, set up required environment by sourcing setupvars script. For example:

Linux:

source /path/to/l_openvino_toolkit_ubuntu22_2023.0.0.10926.b4452d56304_x86_64/setupvars.sh

Windows (cmd):

C:\Path\To\w_openvino_toolkit_windows_2023.0.0.10926.b4452d56304_x86_64\setupvars.bat

And then build the project using cmake:

cmake -B build -DWHISPER_OPENVINO=1

cmake --build build -j --config Release

- Run the examples as usual. For example:

$ ./build/bin/whisper-cli -m models/ggml-base.en.bin -f samples/jfk.wav

...

whisper_ctx_init_openvino_encoder: loading OpenVINO model from 'models/ggml-base.en-encoder-openvino.xml'

whisper_ctx_init_openvino_encoder: first run on a device may take a while ...

whisper_openvino_init: path_model = models/ggml-base.en-encoder-openvino.xml, device = GPU, cache_dir = models/ggml-base.en-encoder-openvino-cache

whisper_ctx_init_openvino_encoder: OpenVINO model loaded

system_info: n_threads = 4 / 8 | AVX = 1 | AVX2 = 1 | AVX512 = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 | COREML = 0 | OPENVINO = 1 |

...

The first time run on an OpenVINO device is slow, since the OpenVINO framework will compile the IR (Intermediate Representation) model to a device-specific 'blob'. This device-specific blob will get cached for the next run.

For more information about the OpenVINO implementation please refer to PR #1037.

NVIDIA GPU support

With NVIDIA cards the processing of the models is done efficiently on the GPU via cuBLAS and custom CUDA kernels.

First, make sure you have installed cuda: https://developer.nvidia.com/cuda-downloads

Now build whisper.cpp with CUDA support:

cmake -B build -DGGML_CUDA=1

cmake --build build -j --config Release

or for newer NVIDIA GPUs (RTX 5000 series):

cmake -B build -DGGML_CUDA=1 -DCMAKE_CUDA_ARCHITECTURES="86"

cmake --build build -j --config Release

Vulkan GPU support

Cross-vendor solution which allows you to accelerate workload on your GPU. First, make sure your graphics card driver provides support for Vulkan API.

Now build whisper.cpp with Vulkan support:

cmake -B build -DGGML_VULKAN=1

cmake --build build -j --config Release

BLAS CPU support via OpenBLAS

Encoder processing can be accelerated on the CPU via OpenBLAS.

First, make sure you have installed openblas: https://www.openblas.net/

Now build whisper.cpp with OpenBLAS support:

cmake -B build -DGGML_BLAS=1

cmake --build build -j --config Release

Ascend NPU support

Ascend NPU provides inference acceleration via CANN and AI cores.

First, check if your Ascend NPU device is supported:

Verified devices

| Ascend NPU | Status |

|---|---|

| Atlas 300T A2 | Support |

Then, make sure you have installed the CANN toolkit. The latest version of CANN is recommended.

Now build whisper.cpp with CANN support:

cmake -B build -DGGML_CANN=1

cmake --build build -j --config Release

Run the inference examples as usual, for example:

./build/bin/whisper-cli -f samples/jfk.wav -m models/ggml-base.en.bin -t 8

Notes:

- If you have trouble with your Ascend NPU device, please create an issue with the [CANN] prefix/tag.

- If you run successfully with your Ascend NPU device, please help update the Verified devices table.

Moore Threads GPU support

With Moore Threads cards the processing of the models is done efficiently on the GPU via muBLAS and custom MUSA kernels.

First, make sure you have installed MUSA SDK rc4.0.1: https://developer.mthreads.com/sdk/download/musa?equipment=&os=&driverVersion=&version=4.0.1

Now build whisper.cpp with MUSA support:

cmake -B build -DGGML_MUSA=1

cmake --build build -j --config Release

or specify the architecture for your Moore Threads GPU. For example, if you have a MTT S80 GPU, you can specify the architecture as follows:

cmake -B build -DGGML_MUSA=1 -DMUSA_ARCHITECTURES="21"

cmake --build build -j --config Release

FFmpeg support (Linux only)

If you want to support more audio formats (such as Opus and AAC), you can turn on the WHISPER_FFMPEG build flag to enable FFmpeg integration.

First, you need to install required libraries:

# Debian/Ubuntu

sudo apt install libavcodec-dev libavformat-dev libavutil-dev

# RHEL/Fedora

sudo dnf install libavcodec-free-devel libavformat-free-devel libavutil-free-devel

Then you can build the project as follows:

cmake -B build -D WHISPER_FFMPEG=yes

cmake --build build

Run the following example to confirm it's working:

# Convert an audio file to Opus format

ffmpeg -i samples/jfk.wav jfk.opus

# Transcribe the audio file

./build/bin/whisper-cli --model models/ggml-base.en.bin --file jfk.opus

Docker

Prerequisites

- Docker must be installed and running on your system.

- Create a folder to store big models & intermediate files (ex. /whisper/models)

Images

We have three Docker images available for this project:

- ghcr.io/ggml-org/whisper.cpp:main: This image includes the main executable file as well as curl and ffmpeg. (platforms: linux/amd64, linux/arm64)

- ghcr.io/ggml-org/whisper.cpp:main-cuda: Same as main but compiled with CUDA support. (platforms: linux/amd64)

- ghcr.io/ggml-org/whisper.cpp:main-musa: Same as main but compiled with MUSA support. (platforms: linux/amd64)

Usage

# download model and persist it in a local folder

docker run -it --rm \

-v path/to/models:/models \

whisper.cpp:main "./models/download-ggml-model.sh base /models"

# transcribe an audio file

docker run -it --rm \

-v path/to/models:/models \

-v path/to/audios:/audios \

whisper.cpp:main "whisper-cli -m /models/ggml-base.bin -f /audios/jfk.wav"

# transcribe an audio file in samples folder

docker run -it --rm \

-v path/to/models:/models \

whisper.cpp:main "whisper-cli -m /models/ggml-base.bin -f ./samples/jfk.wav"

Installing with Conan

You can install pre-built binaries for whisper.cpp or build it from source using Conan. Use the following command:

conan install --requires="whisper-cpp/[*]" --build=missing

For detailed instructions on how to use Conan, please refer to the Conan documentation.

Limitations

- Inference only

Real-time audio input example

This is a naive example of performing real-time inference on audio from your microphone. The stream tool samples the audio every half a second and runs the transcription continuously. More info is available in issue #10. You will need to have sdl2 installed for it to work properly.

cmake -B build -DWHISPER_SDL2=ON

cmake --build build -j --config Release

./build/bin/whisper-stream -m ./models/ggml-base.en.bin -t 8 --step 500 --length 5000
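The relationship between --step and --length can be pictured as a sliding window over the incoming audio: every step, the most recent stretch of audio up to the given length is transcribed again. The sketch below is a simplified model of that idea only; the function name `stream_windows` is hypothetical and the actual tool manages its audio buffer differently in detail.

```python
def stream_windows(total_ms, step_ms=500, length_ms=5000):
    """Yield (start_ms, end_ms) of the audio window considered at each step."""
    end = step_ms
    while end <= total_ms:
        # Look back at most length_ms from the current position.
        yield max(0, end - length_ms), end
        end += step_ms
```

With the defaults above, 6 seconds of audio yield 12 windows, the last one covering 1000 ms to 6000 ms. At 16 kHz, a millisecond offset converts to a sample index by multiplying by 16.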

https://user-images.githubusercontent.com/1991296/194935793-76afede7-cfa8-48d8-a80f-28ba83be7d09.mp4

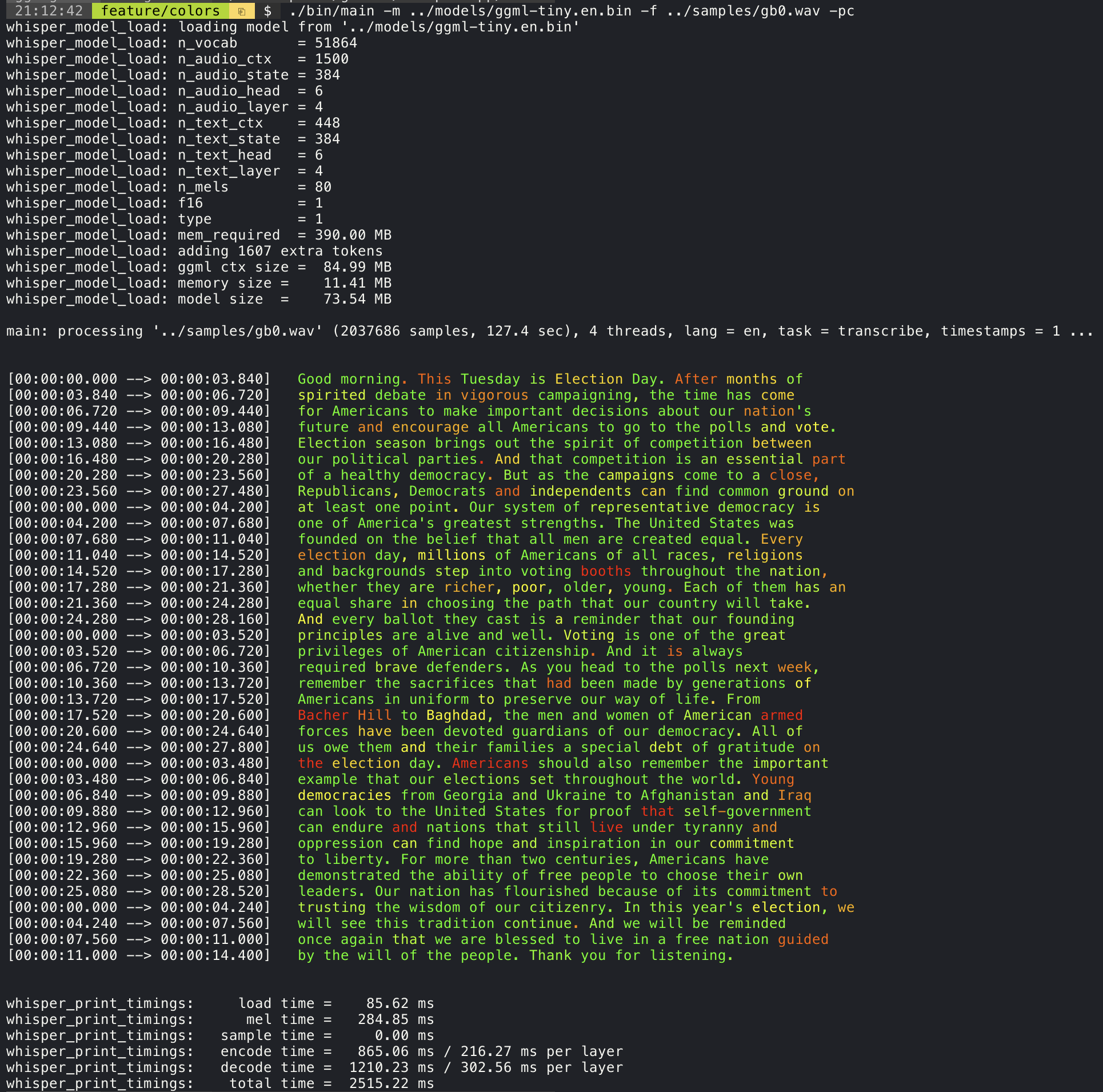

Confidence color-coding

Adding the --print-colors argument will print the transcribed text using an experimental color coding strategy

to highlight words with high or low confidence:

./build/bin/whisper-cli -m models/ggml-base.en.bin -f samples/gb0.wav --print-colors

Controlling the length of the generated text segments (experimental)

For example, to limit the line length to a maximum of 16 characters, simply add -ml 16:

$ ./build/bin/whisper-cli -m ./models/ggml-base.en.bin -f ./samples/jfk.wav -ml 16

whisper_model_load: loading model from './models/ggml-base.en.bin'

...

system_info: n_threads = 4 / 10 | AVX2 = 0 | AVX512 = 0 | NEON = 1 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 1 |

main: processing './samples/jfk.wav' (176000 samples, 11.0 sec), 4 threads, 1 processors, lang = en, task = transcribe, timestamps = 1 ...

[00:00:00.000 --> 00:00:00.850] And so my

[00:00:00.850 --> 00:00:01.590] fellow

[00:00:01.590 --> 00:00:04.140] Americans, ask

[00:00:04.140 --> 00:00:05.660] not what your

[00:00:05.660 --> 00:00:06.840] country can do

[00:00:06.840 --> 00:00:08.430] for you, ask

[00:00:08.430 --> 00:00:09.440] what you can do

[00:00:09.440 --> 00:00:10.020] for your

[00:00:10.020 --> 00:00:11.000] country.
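If you want to post-process this console output, the timestamped lines are easy to parse. The following sketch assumes the line layout shown above (`[HH:MM:SS.mmm --> HH:MM:SS.mmm] text`); the names `parse_segment` and `to_ms` are hypothetical helpers, and times are returned as integer milliseconds to avoid floating-point rounding.

```python
import re

# Matches lines such as: [00:00:00.850 --> 00:00:01.590] fellow
SEGMENT_RE = re.compile(
    r"\[(\d+):(\d+):(\d+)\.(\d{3}) --> (\d+):(\d+):(\d+)\.(\d{3})\]\s*(.*)"
)


def to_ms(hours, minutes, seconds, millis):
    """Convert timestamp fields (as strings) to integer milliseconds."""
    return ((int(hours) * 60 + int(minutes)) * 60 + int(seconds)) * 1000 + int(millis)


def parse_segment(line):
    """Return (start_ms, end_ms, text) for a segment line, or None for any other line."""
    match = SEGMENT_RE.match(line.strip())
    if match is None:
        return None
    fields = match.groups()
    return to_ms(*fields[0:4]), to_ms(*fields[4:8]), fields[8].strip()
```

Lines that are not segments, such as the system_info line, simply return None and can be skipped.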

Word-level timestamp (experimental)

The --max-len argument can be used to obtain word-level timestamps. Simply use -ml 1:

$ ./build/bin/whisper-cli -m ./models/ggml-base.en.bin -f ./samples/jfk.wav -ml 1

whisper_model_load: loading model from './models/ggml-base.en.bin'

...

system_info: n_threads = 4 / 10 | AVX2 = 0 | AVX512 = 0 | NEON = 1 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 1 |

main: processing './samples/jfk.wav' (176000 samples, 11.0 sec), 4 threads, 1 processors, lang = en, task = transcribe, timestamps = 1 ...

[00:00:00.000 --> 00:00:00.320]

[00:00:00.320 --> 00:00:00.370] And

[00:00:00.370 --> 00:00:00.690] so

[00:00:00.690 --> 00:00:00.850] my

[00:00:00.850 --> 00:00:01.590] fellow

[00:00:01.590 --> 00:00:02.850] Americans

[00:00:02.850 --> 00:00:03.300] ,

[00:00:03.300 --> 00:00:04.140] ask

[00:00:04.140 --> 00:00:04.990] not

[00:00:04.990 --> 00:00:05.410] what

[00:00:05.410 --> 00:00:05.660] your

[00:00:05.660 --> 00:00:06.260] country

[00:00:06.260 --> 00:00:06.600] can

[00:00:06.600 --> 00:00:06.840] do

[00:00:06.840 --> 00:00:07.010] for

[00:00:07.010 --> 00:00:08.170] you

[00:00:08.170 --> 00:00:08.190] ,

[00:00:08.190 --> 00:00:08.430] ask

[00:00:08.430 --> 00:00:08.910] what

[00:00:08.910 --> 00:00:09.040] you

[00:00:09.040 --> 00:00:09.320] can

[00:00:09.320 --> 00:00:09.440] do

[00:00:09.440 --> 00:00:09.760] for

[00:00:09.760 --> 00:00:10.020] your

[00:00:10.020 --> 00:00:10.510] country

[00:00:10.510 --> 00:00:11.000] .
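The word-level output and the -ml 16 output shown earlier are closely related: merging consecutive words until the next one would exceed the length limit reproduces the longer segments. The sketch below illustrates that greedy idea; it is not the tool's implementation, and it assumes each word token carries a leading space while punctuation tokens do not.

```python
def wrap_tokens(tokens, max_len):
    """Greedily merge (start_ms, end_ms, text) tokens into lines of at most max_len characters."""
    lines = []
    for start, end, text in tokens:
        if lines and len(lines[-1][2]) + len(text) <= max_len:
            # The token still fits: extend the current line and its end time.
            prev_start, _, prev_text = lines[-1]
            lines[-1] = (prev_start, end, prev_text + text)
        else:
            # Start a new line with this token.
            lines.append((start, end, text))
    return lines
```

Applied to the first tokens of the sample above with `max_len=16`, this yields lines ending at 850 ms, 1590 ms and 4140 ms, matching the segment boundaries in the -ml 16 example.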

Speaker segmentation via tinydiarize (experimental)

More information about this approach is available here: https://github.com/ggml-org/whisper.cpp/pull/1058

Sample usage:

# download a tinydiarize compatible model

./models/download-ggml-model.sh small.en-tdrz

# run as usual, adding the "-tdrz" command-line argument

./build/bin/whisper-cli -f ./samples/a13.wav -m ./models/ggml-small.en-tdrz.bin -tdrz

...

main: processing './samples/a13.wav' (480000 samples, 30.0 sec), 4 threads, 1 processors, lang = en, task = transcribe, tdrz = 1, timestamps = 1 ...

...

[00:00:00.000 --> 00:00:03.800] Okay Houston, we've had a problem here. [SPEAKER_TURN]

[00:00:03.800 --> 00:00:06.200] This is Houston. Say again please. [SPEAKER_TURN]

[00:00:06.200 --> 00:00:08.260] Uh Houston we've had a problem.

[00:00:08.260 --> 00:00:11.320] We've had a main beam up on a volt. [SPEAKER_TURN]

[00:00:11.320 --> 00:00:13.820] Roger main beam interval. [SPEAKER_TURN]

[00:00:13.820 --> 00:00:15.100] Uh uh [SPEAKER_TURN]

[00:00:15.100 --> 00:00:18.020] So okay stand, by thirteen we're looking at it. [SPEAKER_TURN]

[00:00:18.020 --> 00:00:25.740] Okay uh right now uh Houston the uh voltage is uh is looking good um.

[00:00:27.620 --> 00:00:29.940] And we had a a pretty large bank or so.
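In the output above, the [SPEAKER_TURN] marker indicates where the speaker changes; it does not say who is speaking. A small post-processing step can group segments into turns. The sketch below assumes you already have the segment texts as strings; `group_by_turn` is a hypothetical helper name.

```python
TURN_MARKER = "[SPEAKER_TURN]"


def group_by_turn(segment_texts):
    """Join consecutive segment texts into turns; a turn ends at a segment carrying the marker."""
    turns, current = [], []
    for text in segment_texts:
        ends_turn = TURN_MARKER in text
        current.append(text.replace(TURN_MARKER, "").strip())
        if ends_turn:
            turns.append(" ".join(current))
            current = []
    if current:
        # Trailing segments without a closing marker form the last turn.
        turns.append(" ".join(current))
    return turns
```

Assigning speaker labels to the resulting turns (for example, alternating between two speakers) would be an additional assumption that the marker itself does not support.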

Karaoke-style movie generation (experimental)

The whisper-cli example provides support for output of karaoke-style movies, where the

currently pronounced word is highlighted. Use the -owts argument and run the generated bash script.

This requires ffmpeg to be installed.

Here are a few "typical" examples:

./build/bin/whisper-cli -m ./models/ggml-base.en.bin -f ./samples/jfk.wav -owts

source ./samples/jfk.wav.wts

ffplay ./samples/jfk.wav.mp4

https://user-images.githubusercontent.com/1991296/199337465-dbee4b5e-9aeb-48a3-b1c6-323ac4db5b2c.mp4

./build/bin/whisper-cli -m ./models/ggml-base.en.bin -f ./samples/mm0.wav -owts

source ./samples/mm0.wav.wts

ffplay ./samples/mm0.wav.mp4

https://user-images.githubusercontent.com/1991296/199337504-cc8fd233-0cb7-4920-95f9-4227de3570aa.mp4

./build/bin/whisper-cli -m ./models/ggml-base.en.bin -f ./samples/gb0.wav -owts

source ./samples/gb0.wav.wts

ffplay ./samples/gb0.wav.mp4

https://user-images.githubusercontent.com/1991296/199337538-b7b0c7a3-2753-4a88-a0cd-f28a317987ba.mp4

Video comparison of different models

Use the scripts/bench-wts.sh script to generate a video in the following format:

./scripts/bench-wts.sh samples/jfk.wav

ffplay ./samples/jfk.wav.all.mp4

https://user-images.githubusercontent.com/1991296/223206245-2d36d903-cf8e-4f09-8c3b-eb9f9c39d6fc.mp4

Benchmarks

In order to have an objective comparison of the performance of the inference across different system configurations, use the whisper-bench tool. The tool simply runs the Encoder part of the model and prints how much time it took to execute it. The results are summarized in the following Github issue:

Additionally, a script for running whisper.cpp with different models and audio files is provided: bench.py.

You can run it with the following command. By default, it runs against any standard model in the models folder.

python3 scripts/bench.py -f samples/jfk.wav -t 2,4,8 -p 1,2

It is written in python with the intention of being easy to modify and extend for your benchmarking use case.

It outputs a csv file with the results of the benchmarking.

ggml format

The original models are converted to a custom binary format. This allows packing everything needed into a single file:

- model parameters

- mel filters

- vocabulary

- weights

You can download the converted models using the models/download-ggml-model.sh script or manually from here:

For more details, see the conversion script models/convert-pt-to-ggml.py or models/README.md.
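To make the single-file layout more concrete, here is a sketch that reads the model parameters from the start of a converted file. The layout used here (a 4-byte magic value followed by eleven little-endian 32-bit integers in the order listed) is an assumption based on one reading of the conversion script and is not documented in this README, so verify it against models/convert-pt-to-ggml.py before relying on it.

```python
import struct

GGML_MAGIC = 0x67676D6C  # ASCII "ggml"; assumed magic value

# Assumed order of the model parameters that follow the magic value.
HPARAM_NAMES = (
    "n_vocab", "n_audio_ctx", "n_audio_state", "n_audio_head", "n_audio_layer",
    "n_text_ctx", "n_text_state", "n_text_head", "n_text_layer", "n_mels", "ftype",
)
HEADER_SIZE = 4 + 4 * len(HPARAM_NAMES)


def parse_header(data):
    """Parse the assumed header layout from the first bytes of a model file."""
    if len(data) < HEADER_SIZE:
        raise ValueError("file too short to contain a header")
    (magic,) = struct.unpack_from("<I", data, 0)
    if magic != GGML_MAGIC:
        raise ValueError("unexpected magic value: not a ggml model file?")
    values = struct.unpack_from("<%di" % len(HPARAM_NAMES), data, 4)
    return dict(zip(HPARAM_NAMES, values))


def read_header(path):
    """Read and parse the header of the model file at path."""
    with open(path, "rb") as f:
        return parse_header(f.read(HEADER_SIZE))
```

The mel filters, vocabulary and weights follow the header in the file and are not parsed by this sketch.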

Bindings

- Rust: tazz4843/whisper-rs | #310

- JavaScript: bindings/javascript | #309

- React Native (iOS / Android): whisper.rn

- Go: bindings/go | #312

- Java:

- Ruby: bindings/ruby | #507

- Objective-C / Swift: ggml-org/whisper.spm | #313

- .NET: | #422

- Python: | #9

- stlukey/whispercpp.py (Cython)

- AIWintermuteAI/whispercpp (Updated fork of aarnphm/whispercpp)

- aarnphm/whispercpp (Pybind11)

- abdeladim-s/pywhispercpp (Pybind11)

- R: bnosac/audio.whisper

- Unity: macoron/whisper.unity

XCFramework

The XCFramework is a precompiled version of the library for iOS, visionOS, tvOS, and macOS. It can be used in Swift projects without the need to compile the library from source. For example, the v1.7.5 version of the XCFramework can be used as follows:

// swift-tools-version: 5.10

// The swift-tools-version declares the minimum version of Swift required to build this package.

import PackageDescription

let package = Package(

name: "Whisper",

targets: [

.executableTarget(

name: "Whisper",

dependencies: [

"WhisperFramework"

]),

.binaryTarget(

name: "WhisperFramework",

url: "https://github.com/ggml-org/whisper.cpp/releases/download/v1.7.5/whisper-v1.7.5-xcframework.zip",

checksum: "c7faeb328620d6012e130f3d705c51a6ea6c995605f2df50f6e1ad68c59c6c4a"

)

]

)
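If you download the XCFramework archive yourself, you can compare it against the checksum in the manifest before use. The sketch below assumes the checksum is the SHA-256 digest of the zip file, which is what Swift Package Manager uses for binary targets; the helper names `sha256_of_file` and `matches_checksum` are hypothetical.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(path, expected_hex):
    """True if the file's SHA-256 digest equals the expected hex string."""
    return sha256_of_file(path) == expected_hex.lower()
```

For the manifest above, `expected_hex` would be the value of the checksum field and `path` the downloaded whisper-v1.7.5-xcframework.zip.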

Voice Activity Detection (VAD)

Support for Voice Activity Detection (VAD) can be enabled using the --vad

argument to whisper-cli. In addition to this option a VAD model is also

required.

The way this works is that the audio samples are first passed through the VAD model, which detects speech segments. Using this information, only the detected speech segments are extracted from the original audio input and passed to whisper for processing. This reduces the amount of audio data that whisper needs to process and can significantly speed up transcription.
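The filtering step can be sketched as follows: given one speech probability per audio frame, keep runs of frames above a threshold, drop runs that are too short, and pass only the remaining samples on. This is an illustration of the idea rather than the library's implementation; the frame duration, default values and function names are assumptions.

```python
def speech_segments(probs, threshold=0.5, frame_ms=32, min_speech_ms=250):
    """Return [(start_ms, end_ms)] for runs of frames whose speech probability exceeds threshold."""
    segments, start = [], None
    # A trailing 0.0 sentinel closes a run that lasts until the final frame.
    for i, p in enumerate(list(probs) + [0.0]):
        if p > threshold and start is None:
            start = i
        elif p <= threshold and start is not None:
            if (i - start) * frame_ms >= min_speech_ms:
                segments.append((start * frame_ms, i * frame_ms))
            start = None
    return segments


def keep_speech(samples, segments, sample_rate=16000):
    """Concatenate only the samples that fall inside the detected speech segments."""
    kept = []
    for start_ms, end_ms in segments:
        kept.extend(samples[start_ms * sample_rate // 1000 : end_ms * sample_rate // 1000])
    return kept
```

Only the output of `keep_speech` would then need to be transcribed, which is where the speed-up comes from when the recording contains long silences.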

The following VAD models are currently supported:

Silero-VAD

Silero-vad is a lightweight VAD model written in Python that is fast and accurate.

Models can be downloaded by running the following command on Linux or macOS:

$ ./models/download-vad-model.sh silero-v5.1.2

Downloading ggml model silero-v5.1.2 from 'https://huggingface.co/ggml-org/whisper-vad' ...

ggml-silero-v5.1.2.bin 100%[==============================================>] 864.35K --.-KB/s in 0.04s

Done! Model 'silero-v5.1.2' saved in '/path/models/ggml-silero-v5.1.2.bin'

You can now use it like this:

$ ./build/bin/whisper-cli -vm /path/models/ggml-silero-v5.1.2.bin --vad -f samples/jfk.wav -m models/ggml-base.en.bin

And the following command on Windows:

> .\models\download-vad-model.cmd silero-v5.1.2

Downloading vad model silero-v5.1.2...

Done! Model silero-v5.1.2 saved in C:\Users\danie\work\ai\whisper.cpp\ggml-silero-v5.1.2.bin

You can now use it like this:

C:\path\build\bin\Release\whisper-cli.exe -vm C:\path\ggml-silero-v5.1.2.bin --vad -m models/ggml-base.en.bin -f samples\jfk.wav

To see a list of all available models, run the above commands without any arguments.

This model can also be converted manually to ggml using the following commands:

$ python3 -m venv venv && source venv/bin/activate

(venv) $ pip install silero-vad

(venv) $ python models/convert-silero-vad-to-ggml.py --output models/silero.bin

Saving GGML Silero-VAD model to models/silero-v5.1.2-ggml.bin

And it can then be used with whisper as follows:

$ ./build/bin/whisper-cli \
    --file ./samples/jfk.wav \
    --model ./models/ggml-base.en.bin \
    --vad \
    --vad-model ./models/silero-v5.1.2-ggml.bin

VAD Options

- --vad-threshold: Threshold probability for speech detection. A probability for a speech segment/frame above this threshold will be considered as speech.

- --vad-min-speech-duration-ms: Minimum speech duration in milliseconds. Speech segments shorter than this value will be discarded to filter out brief noise or false positives.

- --vad-min-silence-duration-ms: Minimum silence duration in milliseconds. Silence periods must be at least this long to end a speech segment. Shorter silence periods will be ignored and included as part of the speech.

- --vad-max-speech-duration-s: Maximum speech duration in seconds. Speech segments longer than this will be automatically split into multiple segments at silence points exceeding 98ms to prevent excessively long segments.

- --vad-speech-pad-ms: Speech padding in milliseconds. Adds this amount of padding before and after each detected speech segment to avoid cutting off speech edges.

- --vad-samples-overlap: Amount of audio to extend from each speech segment into the next one, in seconds (e.g., 0.10 = 100ms overlap). This ensures speech isn't cut off abruptly between segments when they're concatenated together.
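To make the interplay of the threshold, minimum speech duration, minimum silence duration and padding options more concrete, here is a small C++ sketch. It is an illustration under stated assumptions, not the code whisper.cpp runs: `VadOptions`, `Segment` and `detect_segments` are hypothetical names, the input is assumed to be one speech probability per fixed-length frame, and the maximum-duration split and samples overlap are left out for brevity.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical option bundle mirroring four of the CLI flags described above.
struct VadOptions {
    float threshold;      // cf. --vad-threshold
    int   min_speech_ms;  // cf. --vad-min-speech-duration-ms
    int   min_silence_ms; // cf. --vad-min-silence-duration-ms
    int   speech_pad_ms;  // cf. --vad-speech-pad-ms
};

struct Segment {
    int start_ms;
    int end_ms;
};

// Turns per-frame speech probabilities into padded speech segments.
std::vector<Segment> detect_segments(const std::vector<float>& probs,
                                     int frame_ms,
                                     const VadOptions& opt) {
    assert(frame_ms > 0);
    const int total_ms = static_cast<int>(probs.size()) * frame_ms;
    std::vector<Segment> segments;
    bool in_speech = false;
    int start_ms = 0;
    int silence_start_ms = -1; // -1 means "no silence run in progress"

    // Ends the current segment at end_ms, keeping it only if it is long enough.
    auto close_segment = [&](int end_ms) {
        if (end_ms - start_ms >= opt.min_speech_ms) {
            segments.push_back(Segment{
                std::max(0, start_ms - opt.speech_pad_ms),
                std::min(total_ms, end_ms + opt.speech_pad_ms)});
        }
        in_speech = false;
        silence_start_ms = -1;
    };

    for (std::size_t f = 0; f < probs.size(); ++f) {
        const int t_ms = static_cast<int>(f) * frame_ms;
        if (probs[f] > opt.threshold) {
            if (!in_speech) {
                in_speech = true;
                start_ms = t_ms;
            }
            silence_start_ms = -1; // a short gap is absorbed into the speech
        } else if (in_speech) {
            if (silence_start_ms < 0) {
                silence_start_ms = t_ms;
            }
            // Only a silence run of at least min_silence_ms ends the segment.
            if (t_ms + frame_ms - silence_start_ms >= opt.min_silence_ms) {
                close_segment(silence_start_ms);
            }
        }
    }
    if (in_speech) {
        close_segment(silence_start_ms >= 0 ? silence_start_ms : total_ms);
    }
    return segments;
}
```

With 10 ms frames, a 30 ms minimum for both speech and silence, and 10 ms of padding, a 10 ms pause inside an utterance is absorbed into the segment, while an isolated 10 ms blip of speech is discarded.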

Examples

There are various examples of using the library for different projects in the examples folder. Some of the examples are even ported to run in the browser using WebAssembly. Check them out!

| Example | Web | Description |
|---|---|---|
| whisper-cli | whisper.wasm | Tool for translating and transcribing audio using Whisper |
| whisper-bench | bench.wasm | Benchmark the performance of Whisper on your machine |
| whisper-stream | stream.wasm | Real-time transcription of raw microphone capture |
| whisper-command | command.wasm | Basic voice assistant example for receiving voice commands from the mic |
| whisper-server | | HTTP transcription server with OAI-like API |
| whisper-talk-llama | | Talk with a LLaMA bot |
| whisper.objc | | iOS mobile application using whisper.cpp |
| whisper.swiftui | | SwiftUI iOS / macOS application using whisper.cpp |
| whisper.android | | Android mobile application using whisper.cpp |
| whisper.nvim | | Speech-to-text plugin for Neovim |
| generate-karaoke.sh | | Helper script to easily generate a karaoke video of raw audio capture |
| livestream.sh | | Livestream audio transcription |
| yt-wsp.sh | | Download + transcribe and/or translate any VOD (original) |
| wchess | wchess.wasm | Voice-controlled chess |

Discussions

If you have any kind of feedback about this project feel free to use the Discussions section and open a new topic.

You can use the Show and tell category to share your own projects that use whisper.cpp. If you have a question, make sure to check the Frequently asked questions (#126) discussion.
